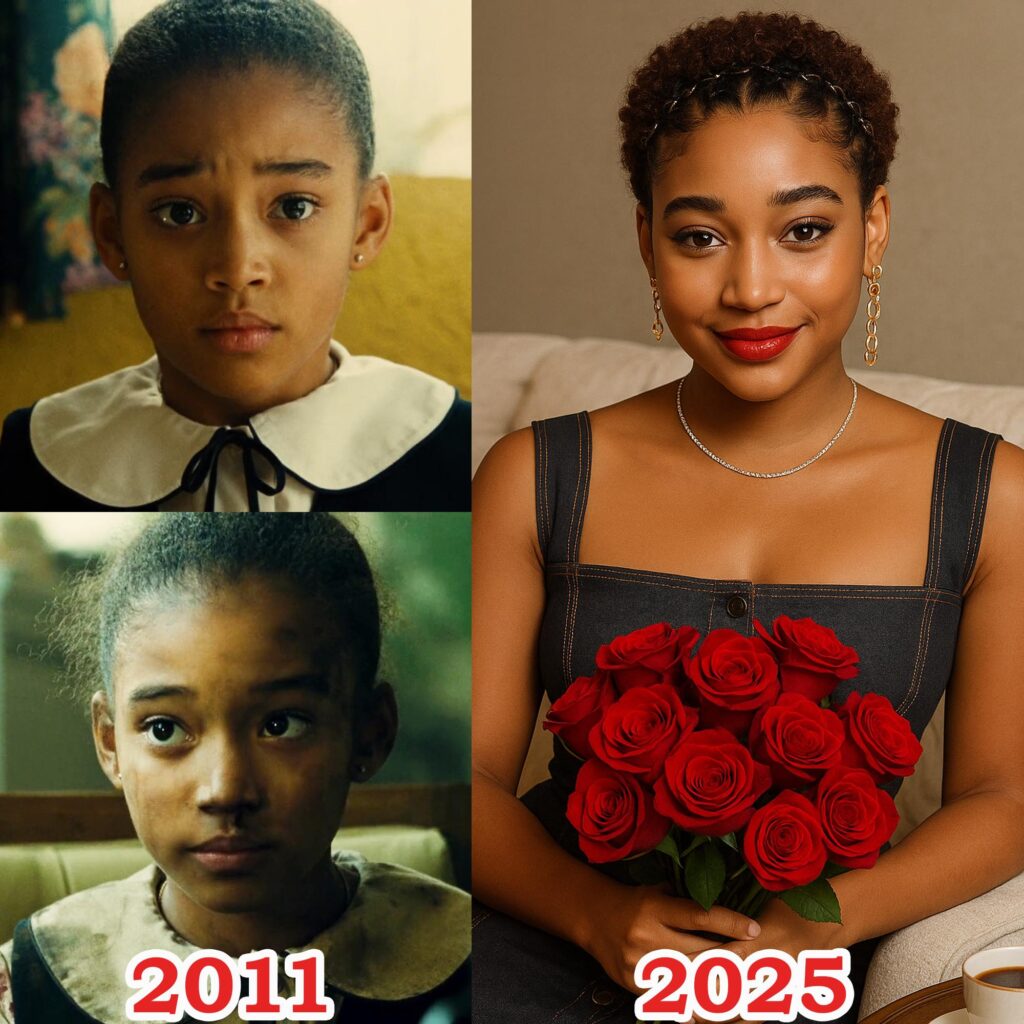

Actress Nikechuè: a promising young talent

In recent years, the name Nikechuè has become increasingly familiar to fans of African cinema. With her distinctive beauty, expressive gaze and natural acting style, Nikechuè has quickly made her mark through a series of memorable roles.

Journey into the spotlight

Nikechuè began her career with small supporting roles in local television series. Through steady effort and a constant drive to improve, she gradually made a name for herself and has become one of the most sought-after young actresses working today.

The appeal of a positive lifestyle

Nikechuè does more than stand out on screen: she also inspires through her positive lifestyle and the messages she addresses to young women. On social media she regularly shares how she overcame hardship to pursue her passion for acting, and she has attracted hundreds of thousands of followers.

Nikechuè embodies the talent, energy and lasting appeal of a new generation of African actors. With a rising career and a large fan base, she appears well placed to go further on the international film scene.